Grasping the future of machine learning and cloud robotics with Ken Goldberg

Meet Ken Goldberg, the UC Berkeley engineering professor whose machine-learning research is laying the groundwork for a new era of intelligent robots.

It’s been 15 years since iRobot debuted its Roomba Vacuuming Robot—so where’s the robot for emptying the dishwasher and folding laundry? Many robots can handle tedious tasks, but so far, they need extensive practice to sense and grasp unknown physical objects. Enter a new innovation using cloud-connected robots that just might jump-start machines’ ability to learn manual tasks.

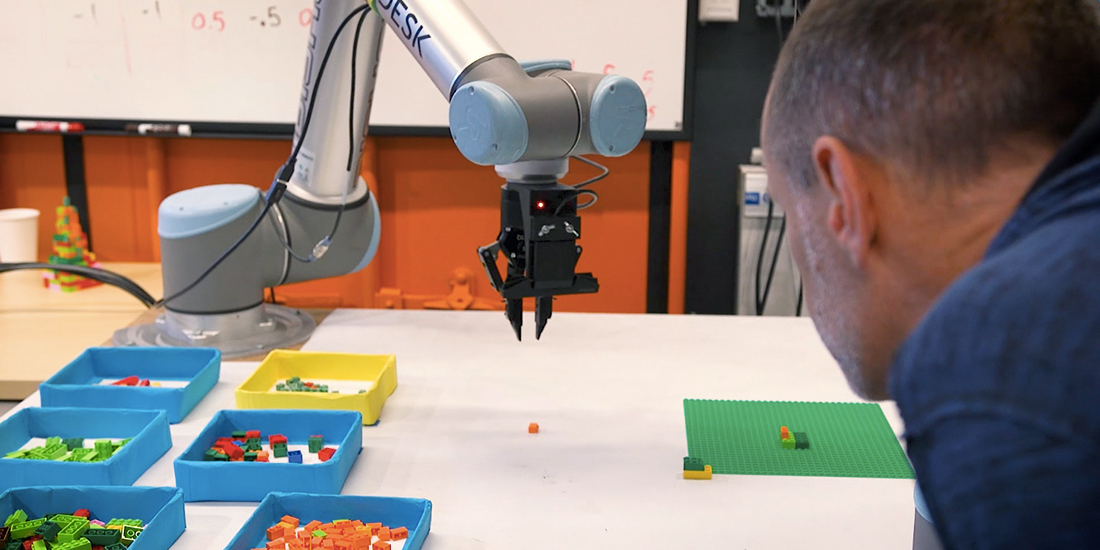

UC Berkeley Distinguished Chair in Engineering Ken Goldberg and his PhD student Jeff Mahler recently published results from Dex-Net 2.0, a research project in which they trained a deep-learning neural network that enables robots to grasp novel objects successfully. Rather than relying on endless physical experimentation, the network learned from a synthetic data set of 6.7 million point clouds (sets of 3D coordinates representing an object’s surface), each paired with a candidate grasp. Because cloud connectivity offloads memory and processing demands from a single robot to the network, using such a huge data set becomes more practical, as does sharing the data and results with other cloud-connected robots.

“We’re finding that we can bootstrap machine learning with synthetic data sets,” Goldberg says. “The key seems to be statistical sampling to identify grasps that are robust to small errors in robot position.”
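Goldberg’s point about statistical sampling can be illustrated with a small Monte Carlo estimate. The sketch below is a minimal illustration under loudly stated assumptions, not Dex-Net’s actual method: the bar-shaped object, the `Grasp` class, the `grasp_succeeds` check, and the noise levels `pos_sigma` and `angle_sigma` are all hypothetical. It perturbs each planned grasp many times to mimic small errors in robot position and scores the grasp by the fraction of perturbed attempts that still succeed.

```python
import random
from dataclasses import dataclass

# Hypothetical toy model, NOT Dex-Net's: a bar-shaped object 0.10 m long,
# centered at x = 0, grasped by a planar parallel-jaw gripper.
HALF_LENGTH = 0.05      # meters; the jaws must close somewhere on the bar
MAX_ANGLE_ERROR = 0.3   # radians; larger misalignment is assumed to slip


@dataclass(frozen=True)
class Grasp:
    x: float      # gripper center along the bar's long axis (meters)
    angle: float  # misalignment from the ideal jaw orientation (radians)


def grasp_succeeds(x: float, angle: float) -> bool:
    """Toy success check standing in for an analytic grasp model."""
    return abs(x) <= HALF_LENGTH and abs(angle) <= MAX_ANGLE_ERROR


def robustness(grasp: Grasp, pos_sigma: float = 0.01, angle_sigma: float = 0.1,
               samples: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the probability that the grasp still succeeds
    when the robot's actual pose deviates from the plan by Gaussian noise."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        # Perturb the planned grasp to mimic small errors in robot position.
        x = grasp.x + rng.gauss(0.0, pos_sigma)
        angle = grasp.angle + rng.gauss(0.0, angle_sigma)
        if grasp_succeeds(x, angle):
            hits += 1
    return hits / samples


# Three hypothetical candidates: centered, near the bar's end, near the angle limit.
candidates = [Grasp(0.0, 0.0), Grasp(0.045, 0.0), Grasp(0.0, 0.25)]
for g in candidates:
    print(g, round(robustness(g), 3))
best = max(candidates, key=robustness)
print("most robust:", best)
```

With these toy settings, the centered grasp should score close to 1.0, while the grasp near the bar’s end and the one near the angle limit both score roughly 0.7: each succeeds when executed perfectly, but not reliably once position errors creep in. Reusing the same seed for every candidate means each grasp faces the same sequence of perturbations, which keeps the comparison between them fair.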

He should know, too. Goldberg cofounded the Berkeley Artificial Intelligence Research (BAIR) Lab and has worked in robotics for more than two decades. His 1995 art installation The Telegarden, in which remote collaborators could plant and water seedlings with a robotic arm, was one of the first Internet-connected robots.

Today, robotic arms possess incredible precision with preprogrammed functions but struggle to adapt to unknown objects. They can weld car parts but might fail to grasp a new part or to regrasp a known part for assembly.

That’s why Dex-Net 2.0, a project of UC Berkeley’s AUTOLAB, is a potential boon for commercializing cloud robotics. Robots that learn from huge synthetic data sets on a network, and that improve through feedback loops fed by results shared over the cloud, could become dexterous enough to rapidly pack warehouse items for shipping, sort and declutter objects in machine shops and households, assemble products, and more.

Dex-Net 2.0’s results came from a combination of neural-network machine learning and statistical reasoning about the shapes of thousands of handheld objects and parts, from a toy shark to a screw-top lid. The network used these data to estimate the probability that a grasp on a given physical item would succeed with standard robotic parts and an off-the-shelf 3D sensor. When it was 50 percent or more confident that it could grasp something, it succeeded 99 percent of the time.
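The 50 percent figure describes a decision rule: attempt a grasp only when the network’s predicted probability of success clears a threshold. The 99 percent figure is then the success rate among the grasps that cleared it. A minimal sketch of both ideas follows, assuming the network outputs one success probability per candidate grasp; the function names, the `(name, confidence)` tuple format, and the trial-log format are hypothetical, not part of Dex-Net’s published interface.

```python
from typing import Optional, Sequence, Tuple


def select_grasp(candidates: Sequence[Tuple[str, float]],
                 threshold: float = 0.5) -> Optional[str]:
    """Return the candidate with the highest predicted success probability,
    or None if even the best one falls below the confidence threshold."""
    if not candidates:
        return None
    name, confidence = max(candidates, key=lambda c: c[1])
    return name if confidence >= threshold else None


def precision_at_threshold(trials: Sequence[Tuple[float, bool]],
                           threshold: float = 0.5) -> Optional[float]:
    """Among logged trials whose predicted confidence met the threshold
    (i.e., grasps the robot would have attempted), return the fraction
    that actually succeeded. Returns None if no trial met the threshold."""
    attempted = [succeeded for confidence, succeeded in trials
                 if confidence >= threshold]
    if not attempted:
        return None
    return sum(attempted) / len(attempted)
```

For example, `select_grasp([("rim", 0.3), ("handle", 0.8)])` returns `"handle"`, while a list whose best score is 0.4 returns `None`, which in this sketch signals that the robot should look again rather than attempt a likely failure. Raising `threshold` makes the robot more cautious: it attempts fewer grasps, and the ones it does attempt tend to succeed more often.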

These results are exciting for both robotics and machine learning, which is why Goldberg and Mahler are sharing some of the Dex-Net 2.0 data. Open source—sharing code, designs, and other data—is one of Goldberg’s five elements of cloud robotics. Data sharing over the cloud enables another element—robot-to-robot learning—so that one bot encountering a novel object in a factory, warehouse, or private household can upload new data to help other robots.

These benefits also come with concerns, including privacy, security, network reliability, and one of particular interest to designers: intellectual property. “You obviously don’t want your robot to be broadcasting everything that’s going on in your house, in an operating room, or in a factory for that matter,” Goldberg says. “As soon as your robot is on the cloud, somebody somewhere can hack into it. It’s important to have our eyes open, not be Pollyanna about this and say, ‘The cloud’s going to solve all the problems.’ It also introduces problems. I don’t think they are prohibitive, though.”

Technological progress inevitably comes with an element of the Wild West. Goldberg says that the intellectual-property issues of putting 3D models of copyrighted items on an open-source cloud haven’t been fully addressed yet. Some of Dex-Net 2.0’s 3D models derive from copyrighted designs, but Goldberg doesn’t believe copyright owners are at risk because, he says, an object’s shape cannot be reverse-engineered from the trained deep-learning network.

Goldberg and Mahler are now extending Dex-Net to the next level of self-learning, so that when it makes a mistake, it can analyze the failure mode to figure out a solution. They want to go from 98 percent accuracy with known objects to 99.999 percent; add regrasping for different purposes, such as assembly; and make Dex-Net more proficient at picking up objects out of clutter and piles. When things are piled up, robots currently have trouble sensing where one object ends and the next begins. The new version should be able to push objects apart to separate them from a pile.

“Children constantly drop things and pick them back up,” Goldberg says. “They are learning by active exploration. You can watch someone playing tennis all day; then, someone hands you the racket. Are you going to be able to play? No. You have to start playing, and then you learn.”

For a cloud robot to teach itself to recognize and pick up new objects at the human level, Goldberg believes it will take a combination of analytical reasoning and data-driven deep learning. “I want to argue for the importance of analytic models that scientists developed over hundreds of years,” he says. “There’s a trend now to throw those out the window and just use data-driven deep-learning models. It’s a mistake to throw out the analytic. The answer is to combine the two.”

The idea of machines that teach themselves gave rise to the concept of the technological singularity. If humans create a machine smarter than themselves, that machine could create a new AI smarter than itself, and so on, beyond the point of all predictability. Many predict the worst from such a development; others, the techno-utopians, predict the best.

Goldberg, however, is a “skeptimist.” He’s skeptical of both the doomsday scenarios and the utopian hype surrounding AI, but he’s also optimistic that human-machine synergy can improve lives. He proposes “multiplicity” as an alternative to the singularity: diverse groups of humans ask important questions and then work with diverse groups of machines to make the best decisions.

“I can’t predict exactly the way things will unfold, but the level of industry interest right now is unlike anything I’ve seen in 30 years,” Goldberg says. “I’m convinced that a sufficiently diverse group of machines will always learn to make better decisions than a single machine working alone.”

About the author

Markkus Rovito

Markkus Rovito joined Autodesk as a contractor six years ago before moving to a full-time role as a content marketing specialist focusing on SEO and owned media. After graduating from Ohio University with a journalism degree, Rovito wrote about music technology, computers, consumer electronics, and electric vehicles. Since joining Autodesk, he’s developed a great appreciation for exciting emerging technologies that are changing the world of design, manufacturing, architecture, and construction.