The Dynamic Robotics Systems Lab and an industrial design team from the New Jersey Institute of Technology have created TOCABI. TOCABI is a humanoid robot that humans can control from thousands of miles away. Powered by the cloud collaboration tools and design capabilities of Fusion 360, the teams worked together seamlessly despite a 12-hour time difference and a pandemic.

The Power of Remote Robotic Avatars

Eliminating daily face-to-face interactions with our friends, peers, and colleagues during the COVID-19 pandemic has put significant limitations on our well-being and productivity. However, this collective experience, though brutal, has spurred a plethora of brand new ideas and incredible innovations. For instance, what if you could precisely control the movements of a powerful robot in real-time from hundreds or thousands of miles away?

The applications of this idea are exciting. Imagine a fleet of rescue robots providing life-saving help in a disaster area too dangerous for human relief workers. Or an expert with rare skills providing their specialized services anywhere in the world, instantly. A digital twin comes to life through hardware.

This futuristic vision is why the XPRIZE Foundation, dedicated to inspiring industry breakthroughs, is funding a $10M prize for robotic avatars. One of the semifinalists is TOCABI (Torque Controlled Compliant Biped), a full-size humanoid avatar developed by the Dynamic Robotics Systems Lab (DYROS). DYROS is a research lab in South Korea led by Professor Jaeheung Park. DYROS teamed up with an industrial design team from the New Jersey Institute of Technology (NJIT). Both teams used Autodesk Fusion 360 to collaborate on this fascinating project.

An Eight-Year Collaboration

One of Professor Park’s primary collaborators is Mathew Schwartz, an assistant professor at NJIT and a former research scientist in the DHRC. Schwartz’s areas of expertise include the connection between computational design and human factors, human movement analysis with motion capture, and robotic systems for manufacturing.

“Back in 2013, Professor Park asked me to design some plastic casings to cover the robot’s legs,” Schwartz says. “I saw a more exciting opportunity to integrate the structure with the design. At the time, the idea of totally organic and curved shapes on humanoid robots was rare because low tolerances in machining could complicate the problem of transferring simulation to reality. But with my experience in advanced manufacturing, I knew it was possible.”

Getting the team on board with his idea was “a struggle.” However, the debut of Boston Dynamics’ Atlas robot, which had curved pipes around its legs, sealed the deal. The team focused primarily on the avatar’s legs from 2013 until 2015, then moved on to the upper body. For Schwartz, the project’s intent has always been to improve robotics research with a platform that helps researchers hone their ideas about motors, joint configurations, and control theories. The idea to use the robot as an avatar came about relatively recently, when XPRIZE announced its competition.

“I am most proud of the robot’s design,” Schwartz says. “Many people assume an avatar is a simple mapping of human movements to robotic ones, which is technically true. But it doesn’t capture how advanced TOCABI is. We built it to move autonomously, balance and walk independently, plan and execute tasks, and many other features. The avatar demonstrates the control aspect, which could be done on a tablet device or through a more immersive experience, as we’ve shown here.”

Advantages of Torque-Control

Humans can control robots in a variety of ways. TOCABI uses a torque-control system: it determines where it needs to move, then calculates and commands the torque the motors must produce to make that movement. This approach is much safer for human-robot interaction than the traditional position control used in manufacturing, where a robotic arm moves to a pre-determined position no matter what, presenting serious risks for any human standing in the way.
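To make the contrast concrete, here is a minimal Python sketch of torque control for a single joint. It is not TOCABI's actual controller: the function name, the gains, the one-link arm model, and the torque limit are all hypothetical values chosen for illustration. The sketch computes a spring-damper (PD) torque toward the target, adds the torque needed to hold the link against gravity, and clamps the result.

```python
import math


def joint_torque(q, qd, q_des, kp=50.0, kd=5.0,
                 mass=2.0, length=0.5, g=9.81, tau_max=20.0):
    """Return a clamped torque command (N*m) for a one-link arm.

    q, qd : measured joint angle (rad, from horizontal) and velocity (rad/s)
    q_des : desired joint angle (rad)
    All gains and physical parameters are hypothetical.
    """
    # Torque needed just to hold a point mass at the end of the link.
    gravity = mass * g * length * math.cos(q)
    # PD term pulls the joint toward the target like a spring-damper.
    tau = kp * (q_des - q) - kd * qd + gravity
    # Clamp so the motor never pushes harder than tau_max.
    return max(-tau_max, min(tau_max, tau))


if __name__ == "__main__":
    # At the target and at rest, only gravity compensation remains.
    print(joint_torque(0.0, 0.0, 0.0))
    # Far from the target, the command saturates at the torque limit.
    print(joint_torque(0.0, 0.0, 10.0))
```

Because the command is a bounded torque rather than a position, a joint that is blocked by a person or an obstacle pushes with at most `tau_max` instead of forcing its way to the target.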

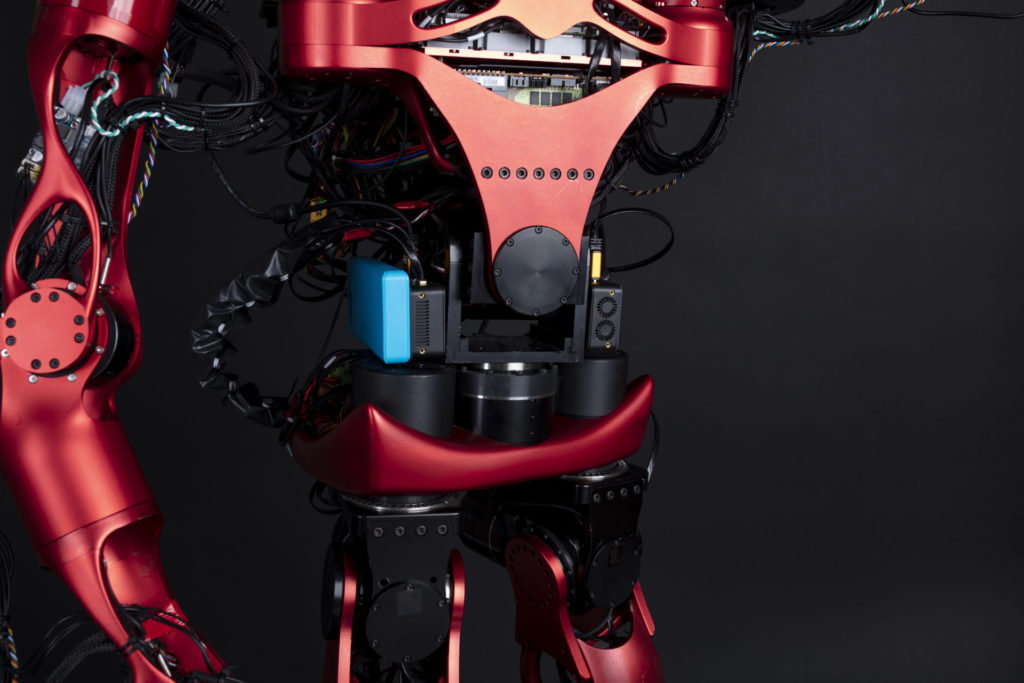

In addition, TOCABI is 1.8 meters tall, weighs 100 kg, and operates with 33 degrees of freedom. Of course, all of this was made possible by years of intense collaboration. The teams were able to collaborate seamlessly despite a 12-hour time difference, thanks to Fusion 360.

“Professor Park and I have worked as an interdisciplinary team for eight years,” Schwartz says. “We have combined aesthetic ideas and industrial design techniques to overcome the real-world challenges of engineering, manufacturing, research, prototyping, and development. Fusion 360 helped make that possible with its unique combination of organic modeling and cloud collaboration.”

Collaborating with Fusion 360

The TOCABI team includes over 20 people. In addition to Professor Park and Schwartz, lead engineer Jaehoon Sim, software team leader Junewhee Ahn, and systems integration team leader Suhan Park are all part of the team (among many others).

“Our robot is built from the ground up,” Schwartz explains. “We make the motor housings and try multiple versions of designs, switching between ideas quickly. But as a research collaborative that includes many university students who are coming and going, managing our projects is very challenging. We use Fusion 360 to track what everyone is working on.”

Fusion 360 helps the TOCABI team manage version control, get update notifications, and merge new parts as they become available. Much of this work occurs over video conference to ensure teams are working on the correct version and that everyone receives notifications when others make decisions. Fusion 360 and its cloud capabilities helped streamline this process.

“The best example is the robot’s head, which we redesigned and implemented within two months of the XPRIZE deadline,” Schwartz says. “We put the head shape through multiple iterations, each of which had to attach to the neck joint. We also updated the face multiple times and the video cameras used to display the environment to the operator. All these decisions were happening simultaneously, so a centralized, integrated design platform was key for meeting our deadline.”

An Integrated Tool That Gets the Job Done

Fusion 360 also helped the team accurately model organic shapes, especially in instances that required very tight tolerances, such as the motor casing connections.

“Fusion became a truly interdisciplinary platform for us, where the engineering and design teams both felt comfortable using it,” Schwartz says. “We wanted to suggest a human form, but of course not make it identical. Motor constraints made this impossible, but the nearly unconstrained organic modeling let us apply curves and references to a human form, making our robot look like something people expect to move like a human.”

Integrating multiple features in one collaborative platform also enabled the TOCABI team to create a tight feedback loop. They could define motors and joint locations in one place and the linkages using that information in a separate location. In the same way, the team improved communication of design and engineering requirements with the simulations tab.

“Once we set up our structural simulations, it was easy to discuss the required safety factor and redesign and analyze iteratively,” Schwartz says. “Sometimes, we would use topology optimization to explore our decisions. Across the center of the robot, there is a split bar connecting both arms. It sits on the torso and is vital to overall strength. We originally designed it intuitively, but by making it solid and using topology optimization, we were able to validate our decisions.”
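For readers unfamiliar with the term, a safety factor is commonly defined as the ratio of a material's yield strength to the maximum stress a simulation predicts. The Python sketch below shows that check. The function names, the stress values, and the required factor of 2.0 are hypothetical and are not taken from the TOCABI project.

```python
def safety_factor(yield_strength_mpa, max_stress_mpa):
    """Ratio of material yield strength to peak simulated stress."""
    if max_stress_mpa <= 0:
        raise ValueError("max_stress_mpa must be positive")
    return yield_strength_mpa / max_stress_mpa


def meets_requirement(yield_strength_mpa, max_stress_mpa, required=2.0):
    """True if the part meets the (hypothetical) required safety factor."""
    return safety_factor(yield_strength_mpa, max_stress_mpa) >= required


if __name__ == "__main__":
    # Illustrative numbers only: 276 MPa yield, 92 MPa peak stress.
    print(safety_factor(276.0, 92.0))
    print(meets_requirement(276.0, 92.0))
    # A more heavily loaded revision of the same part fails the check.
    print(meets_requirement(276.0, 184.0))
```

In an iterative workflow like the one Schwartz describes, each redesign would be re-simulated and its new peak stress run through the same check.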

Formlabs SLA Printers Offered Critical Support

Of course, manufacturing a robot of this complexity is no small feat. Achieving such a sophisticated design takes a massive amount of work. Prototyping, testing, and validation cycles all played a critical role in ensuring everything came together correctly. To that end, the TOCABI team found Formlabs’ SLA solutions to be instrumental to their success.

Designing the head, for example, was critical to get right. The operator needs a proper sense of their surroundings through the robot surrogate, along with the mobility to look around. This process took some time and required many iterations along the way. 3D printing became both a prototyping and manufacturing tool for the team. “The cameras kept changing, but we didn’t have enough time since the CNC parts would take a few weeks to make,” Schwartz shares. By shifting the design to a simpler machined bracket plate to hold the electronics, the vision team was able to keep iterating with Formlabs printers, unimpeded, and experiment with multiple strategies before landing on their final design.

What’s Next for TOCABI?

Schwartz joined the South Korea team in Miami for the semi-finals of the XPRIZE competition. XPRIZE will announce the results soon. No matter what happens, Schwartz plans to continue developing his ideas of robotic control, most likely in collaboration with Professor Park.

“Due to the pandemic, we had to wait nearly two years for our two teams to meet,” Schwartz says. “When we finally did, the first words out of Professor Park’s mouth were, ‘We have a new idea for a robot. Are you in?’ Of course, I said yes.”

We can’t wait to see what team TOCABI does next. From additive manufacturing to its advancements in digital twin processes, TOCABI is not on the bleeding edge. It’s defining what the edge is.