
Cloud outages are bad. They are terrible. As much as we are passionate about our vision of a cloud-powered ecosystem for product development, none of that matters if we cannot provide world-class cloud services with world-class security and reliability. The recent spate of outages has only reinforced this fundamental imperative. At every level of the company, we are treating this as Job #1 (yes, before new features) and are applying our best resources and efforts to this problem.

So why is it so hard, and what exactly are we doing about it? You are justified in asking. In this post, I will attempt to answer those questions.

The first thing that distinguishes cloud-based software from desktop software is that the cloud behaves in a non-deterministic way. One piece of software in the cloud cannot rely 100% on another piece to behave exactly the same way every time. Because cloud software runs on remote servers in a data center under varying load, network latency and other unpredictable factors, each transaction with a cloud service is subject to a range of variability. Add to that the complexity of the tens of interrelated services that typically power an application like Fusion 360, and we end up with a complex web in which each connection is subject to some variation in reliability. All of this can surface at the user level as varying degrees of performance.

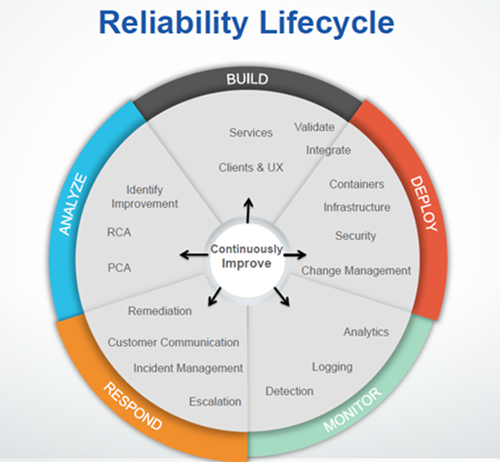
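To put a rough number on how that variability compounds, here is a minimal sketch. It assumes, purely for illustration, that every service a request touches succeeds independently with the same probability. The 99.9% figure and the service counts are hypothetical, not measurements from our system.

```python
def chain_availability(per_service: float, n_services: int) -> float:
    """Probability that a request succeeds when it needs ALL of
    n_services to succeed, assuming independent failures (a simplification)."""
    return per_service ** n_services


# Hypothetical numbers: each service is up 99.9% of the time.
for n in (1, 10, 30):
    print(f"{n:2d} services -> {chain_availability(0.999, n):.4f}")
```

Under these assumptions, a request that passes through 30 services succeeds only about 97% of the time, even though each individual service looks very reliable on its own.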

Ok, fine, but how do you then make a cloud-based application work reliably and consistently for the end-user? There are three parts to the answer:

1. Availability: First and foremost, every service in the cloud must be deployed and operated in a way that allows it to be available and performant under normal and abnormal load as well as when subjected to malicious attacks. This involves things like making sure that we have enough hardware resources to run the software, load balancing, fast networks, etc. We have dozens of people whose job is to do just that.

For example, one of our recent outages occurred because a database server did not have enough memory allocated to it and degraded under an unexpected spike in usage. We remedied this by increasing the memory allocation, and our DBA experts are now auditing every database server to make sure we are not exposed to this problem anywhere else in the system.

2. Resiliency: The idea of resiliency is that each layer of the cloud service stack must account for the possibility that some other layer it depends on may not perform at peak level every time, so it must be able to absorb any degradation in the services it depends on. This capability has to be built into every layer of the software as well as the end-user experience. When this is done right, the user is rarely affected by the non-deterministic nature of the cloud, and when a problem does reach the user, the experience is as user-friendly as possible.

Recently, we had an outage when a critical data service went into a denial-of-service state because an application bombarded it with a flood of retries triggered by an unexpected error. We realized that all communication with services must follow industry best practices for error handling, so that errors do not cause retry flooding. We are building service communication SDKs that implement these patterns, and all services and clients will use them.
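One widely used pattern for avoiding retry floods is capped exponential backoff with random jitter, combined with a hard limit on the number of attempts. The following is a minimal sketch of that general technique, not the code in our SDKs. The names `TransientError` and `call_with_backoff` and all default values are assumptions made for illustration.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical error type for failures that are worth retrying."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.1,
                      max_delay=5.0, sleep=time.sleep, rng=random.random):
    """Call operation(), retrying transient failures a bounded number of times."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # give up rather than retry forever
            # The wait ceiling doubles on each attempt, up to max_delay.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            # Random jitter spreads clients out so they do not retry in lockstep.
            sleep(rng() * ceiling)
```

The two properties that matter here are that the number of retries is bounded and that the wait grows with each failure, so a struggling service sees less traffic from its clients rather than more.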

We are also auditing and refining the user experience of cloud workflows in Fusion 360 itself to make sure that the application protects the user from loss of work and time. For example, we will make sure that if a data service outage occurs, the user is gracefully taken to offline mode and then seamlessly returned to online mode when the service is restored. We intend to make that experience similar to how Outlook continues to function even when connectivity is compromised.
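As a rough illustration of the offline/online idea, the sketch below queues changes locally whenever the data service is unreachable and sends them, in order, once it is reachable again. This is a simplified model, not how Fusion 360 is implemented. `SyncClient`, `ServiceUnavailable` and the `upload` callable are hypothetical names.

```python
from collections import deque


class ServiceUnavailable(Exception):
    """Hypothetical error raised when the data service cannot be reached."""


class SyncClient:
    def __init__(self, upload):
        self._upload = upload    # callable that sends one change to the cloud
        self._pending = deque()  # changes kept locally until they are uploaded
        self.online = True

    def save(self, change):
        """Record the change locally first, then try to sync it."""
        self._pending.append(change)
        self.flush()

    def flush(self):
        """Send queued changes in order; switch to offline on the first failure."""
        while self._pending:
            try:
                self._upload(self._pending[0])
            except ServiceUnavailable:
                self.online = False
                return
            self._pending.popleft()  # drop a change only after it was sent
        self.online = True

    @property
    def pending_count(self):
        return len(self._pending)
```

A change is removed from the local queue only after the upload succeeds, so an outage in the middle of a save delays the sync rather than losing the work.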

3. Remediation: Finally, we also need to account for the fact that sometimes, hopefully very rarely, an unexpected condition will cause a service to degrade or fail in such a way that all the built-in resiliency is not sufficient to protect the user. When this happens, we need to detect the condition and fix the problem as quickly as possible, so that the window of exposure to users is minimized.

We are doing a couple of things here to improve our response and remediation time. First, we are refining our systems that detect any degradation or outage, so our cloud operations engineers are alerted as quickly as possible. Second, we are making sure that the right people are available at all hours in the right time zones to quickly triage the problem and get the system operational again as soon as possible.
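One simple way to detect degradation early is to track the error rate over a sliding window of recent requests and raise an alert when it crosses a threshold. The sketch below shows that idea only. It does not describe our monitoring systems, and the class name, window size and threshold are illustrative assumptions.

```python
from collections import deque


class ErrorRateMonitor:
    def __init__(self, window=100, threshold=0.2, min_samples=20):
        self._results = deque(maxlen=window)  # keeps only the most recent outcomes
        self._threshold = threshold
        self._min_samples = min_samples

    def record(self, success: bool) -> bool:
        """Record one request outcome; return True if an alert should fire."""
        self._results.append(success)
        return self.degraded()

    def error_rate(self) -> float:
        if not self._results:
            return 0.0
        return self._results.count(False) / len(self._results)

    def degraded(self) -> bool:
        # Require a minimum number of samples so one early failure
        # does not trigger an alert on its own.
        return (len(self._results) >= self._min_samples
                and self.error_rate() >= self._threshold)
```

In practice the return value of `record` would feed whatever paging or alerting mechanism the operations team uses. That part is outside the scope of this sketch.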

And finally, as we continuously build, deploy and operate our cloud software, bringing new levels of functionality and quality to you, we will monitor all three aspects above and keep improving on each of them. In the end, you, the users, should never have to worry about any of this and can focus on what is important to you: getting your job done as smoothly as possible.

We are 100% committed to that goal and have several projects underway to get there as quickly as possible.

Hopefully, you will start seeing the needle move quickly towards that goal.